The Allen Institute intends to broaden Satlas to include more map types, such as one that shows the different crops grown throughout the world.

The Allen Institute for AI has created a new tool called Satlas that maps renewable energy (RE) projects and tree coverage around the world. The tool uses imagery from the European Space Agency's Sentinel-2 satellites, but those images are fairly low-resolution on their own.

The remedy? A feature called “Super-Resolution.” It essentially uses deep learning models to fill in details, such as what buildings might look like, in order to generate high-resolution images.

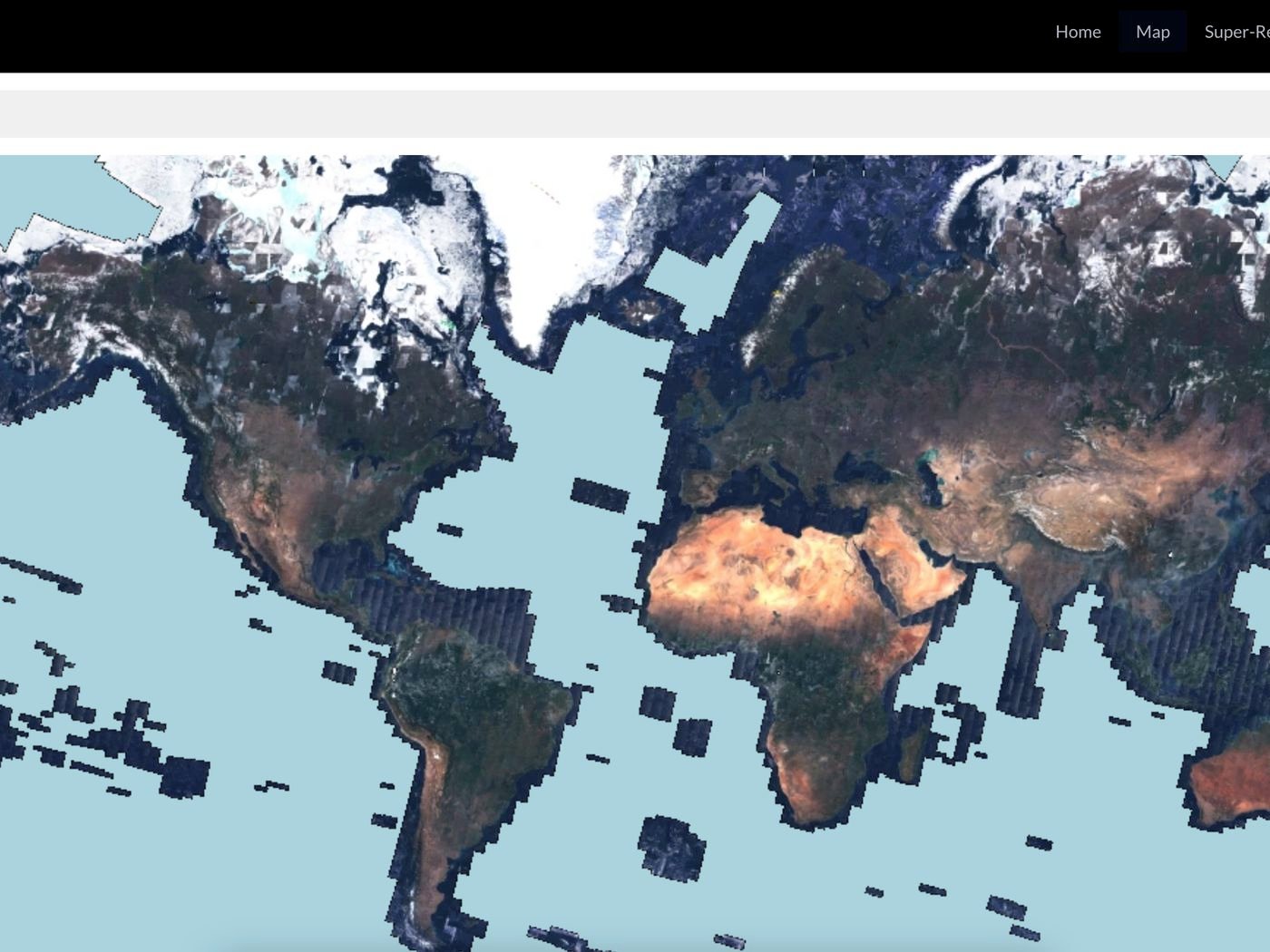

For now, Satlas focuses on RE projects and global tree cover. The data is updated monthly and covers the parts of the planet monitored by Sentinel-2. That includes most of the world, except parts of Antarctica and vast stretches of open ocean.

It shows solar farms as well as onshore and offshore wind turbines. It can also be used to track how tree canopy cover has changed over time. Those insights matter to policymakers who are trying to meet climate and other environmental goals. According to the Allen Institute, there has never been a public tool this comprehensive that is also free.

According to its developers, this is possibly one of the first demonstrations of super-resolution on a global map. There are still a few kinks to work out, of course. Like other generative AI models, Satlas is prone to “hallucination.”

“You can either call it hallucination or poor accuracy, but it was drawing buildings in funny ways,” says Ani Kembhavi, senior director of computer vision at the Allen Institute. A building might be rectangular, for example, but the model might render it as a trapezoid or some other shape.

That might be because the model does not account well for regional differences in architecture. Another common kind of hallucination is placing vehicles wherever the model expects them to be, based on its training images.

To create Satlas, the Allen Institute team spent countless hours manually labeling 36,000 wind turbines, 7,000 offshore platforms, 4,000 solar farms, and 3,000 tree cover percentages. That is how they trained the deep learning models to recognize those features on their own.

To achieve super-resolution, they fed the models many low-resolution images of the same location taken at different times. The model uses those images to predict sub-pixel details in the high-resolution images it generates.
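To see why several low-resolution captures of the same place can carry more detail than any single one, here is a minimal sketch in Python. It is not Satlas's method: Satlas relies on trained deep learning models, while this toy swaps in classical "shift-and-add" on a one-dimensional signal. It also assumes the sub-pixel offset of every capture is known exactly. The names `capture`, `shift_and_add`, and the sample `scene` values are hypothetical and exist only for illustration.

```python
def capture(scene, factor, offset):
    """Simulate one low-resolution capture of `scene`.

    Toy assumption: the sensor keeps every `factor`-th sample,
    starting at the sub-pixel `offset`.
    """
    return scene[offset::factor]


def shift_and_add(frames, factor, length):
    """Place each frame's samples back on the fine grid and average overlaps.

    `frames` maps a known offset to the capture taken at that offset.
    Fine-grid positions that no frame observed stay None.
    """
    sums = [0.0] * length
    counts = [0] * length
    for offset, frame in frames.items():
        for i, value in enumerate(frame):
            position = offset + i * factor
            sums[position] += value
            counts[position] += 1
    return [s / c if c else None for s, c in zip(sums, counts)]


# Hypothetical fine-grained "ground truth" (made-up values).
scene = [0, 3, 9, 4, 0, 0, 7, 0]
factor = 2

# Two captures of the same location, each with a different sub-pixel offset.
frames = {offset: capture(scene, factor, offset) for offset in range(factor)}

# Reconstruction from a single capture versus from both captures.
single = shift_and_add({0: frames[0]}, factor, len(scene))
combined = shift_and_add(frames, factor, len(scene))

print("single capture:", single)
print("two captures:  ", combined)
```

With one capture, half of the fine grid stays unknown. With two captures at different offsets, every position is observed. Real satellite imagery adds noise, blur, and unknown offsets, which is one reason a learned model that predicts the missing detail is used instead, and also why that prediction can go wrong.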

Kembhavi says the team's intention “was to sort of build a foundation model for monitoring our planet.” The plan is to develop this foundation model, fine-tune it for specific tasks, and then make the resulting AI predictions available to other scientists so they can study the effects of climate change and other phenomena occurring on Earth.