The Biden administration is gearing up to introduce fresh regulations aimed at curtailing the export of advanced AI models.

To strengthen America’s position in the global AI race and reduce the threat posed by adversarial nations, the Biden administration is preparing new rules to restrict the export of advanced AI models.

These models, which power AI applications such as ChatGPT, could face tighter controls, as the Commerce Department is weighing measures to restrict their distribution, according to people familiar with the matter.

If implemented, the rules would primarily target proprietary, or closed-source, AI models, whose software and training data are kept confidential. The aim is to keep cutting-edge AI technology out of the hands of countries such as China and Russia, which U.S. officials regard as potential adversaries in AI.

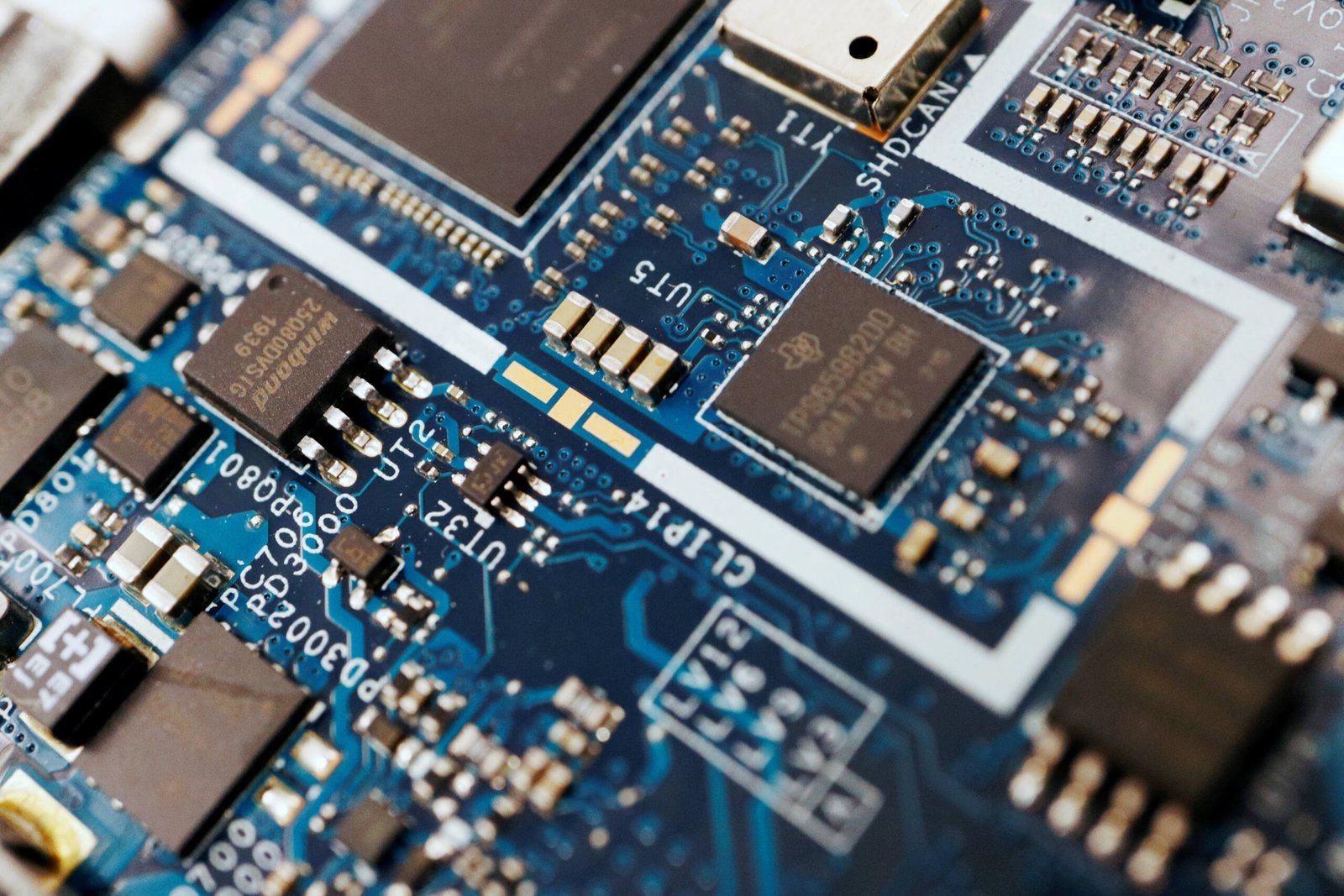

Over the past two years, the U.S. has introduced a series of measures restricting exports of advanced AI hardware to China, particularly AI chips, in an effort to slow Beijing’s development of military AI applications. Controlling the export of AI models, however, is harder, because the field is advancing so quickly.

The Commerce Department declined to comment, and the Russian Embassy in Washington did not respond to requests for comment. The Chinese Embassy, by contrast, criticized the proposed measures as coercive and unilateral and said China would take the necessary steps to safeguard its interests.

At present, U.S. companies such as Microsoft, OpenAI, Google DeepMind, and Anthropic lead the development of closed-source AI models. They have built some of the most powerful models available, and government and private-sector researchers worry that hostile actors could misuse them.

Of particular concern is the possibility that AI models could be used to mount aggressive cyberattacks or even help create biological weapons, risks that recent research has highlighted.

To address these concerns, the U.S. government is considering export controls aimed at countries known for hostile cyber activity, including Russia, China, North Korea, and Iran. The rules could use a computing-power threshold to decide which AI models are subject to restrictions, an approach consistent with the AI executive order issued in October, which also relies on computing-power thresholds.

Still, enforcing effective export controls on AI models is difficult. The rapid pace of AI development, combined with the spread of open-source models, makes it hard for regulators to set clear criteria for which models should be controlled.

Despite these challenges, the Biden administration appears determined to strengthen national security in AI. By pursuing regulations that protect advanced AI technology, it aims to stay ahead in the global AI race while limiting the risks posed by hostile actors.

As AI continues to evolve, policymakers face the difficult task of balancing innovation against security, and how well export controls work is likely to shape the course of AI development in the years ahead.