Researchers from Rice University’s computer science department have identified biases within widely used machine learning tools crucial for immunotherapy research.

The study was led by Ph.D. students Anja Conev, Romanos Fasoulis, and Sarah Hall-Swan, alongside faculty members Rodrigo Ferreira and Lydia Kavraki. It shows how skewed training data can undermine the accuracy of predictive algorithms.

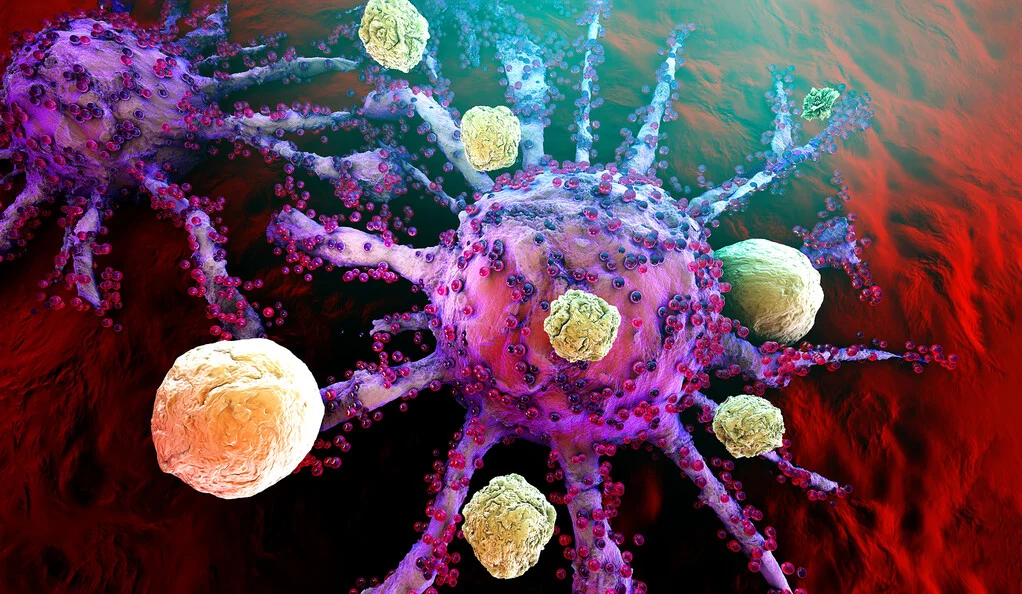

Immunotherapy research focuses on harnessing the body's immune system to fight diseases, particularly cancer. Central to this research is peptide-human leukocyte antigen (pHLA) binding, in which peptides bind to proteins encoded by the HLA genes, a step crucial for activating an immune response.

The team found that the data used to train machine learning models for predicting pHLA binding come disproportionately from higher-income regions. This imbalance is a serious problem because it could lead to immunotherapies that are less effective for people from lower-income populations.

According to Fasoulis, each individual's genetic makeup, particularly their variant HLA alleles, is critical to how well a personalized immunotherapy works. Biased machine learning models may fail to account for these differences, producing therapies that are not equally effective across diverse populations.

The study challenges the notion of "pan-allele" predictors, which claim to make accurate predictions even for alleles that are absent from their training data. Conev said the team's research aimed to debunk this idea by assessing whether these predictors actually work for data from lower-income populations.

Their analysis of publicly available pHLA binding prediction data confirmed their hypothesis that the data are biased, highlighting the urgent need for a more inclusive approach to machine learning in immunotherapy research. Ferreira emphasized that addressing bias effectively requires considering data within their social context.

Kavraki underscored the importance of accurate tools in clinical settings, particularly for personalized cancer immunotherapies, where biased algorithms could have serious consequences for patients. She stressed the need to raise awareness within the research community of how difficult it is to obtain unbiased datasets.

Despite the biases identified, Conev expressed optimism that, because the data are publicly accessible, they remain open to scrutiny. The team hopes its findings will spur future research toward more inclusive and effective immunotherapies that benefit diverse populations.

The Rice University study marks a significant step toward addressing bias in the machine learning tools that immunotherapy research depends on, and toward more equitable healthcare.